re:Invent 2019 Recap

Tags: aws reinvent recap

Introduction

re:Invent is the largest Amazon-run event, held yearly in Las Vegas. It has been progressively scaling up in size since the first one in 2012, and this year I was lucky enough to attend for the first time.

I’m sure many readers have caught up on the announcements remotely. As someone who tried to digest which releases were important from afar in 2018, I’ve decided to put together this post outlining the things that stood out to me.

Fargate

When Fargate launched at re:Invent 2017 there was a lot of excitement from anyone who had been running container workloads. Fargate allowed developers to freely scale their workloads UP and OUT with very minimal configuration and only pay for the time those containers were actually running.

A few years later, developers have been looking to Fargate for more features, and we were lucky enough to see a few of these launch this year!

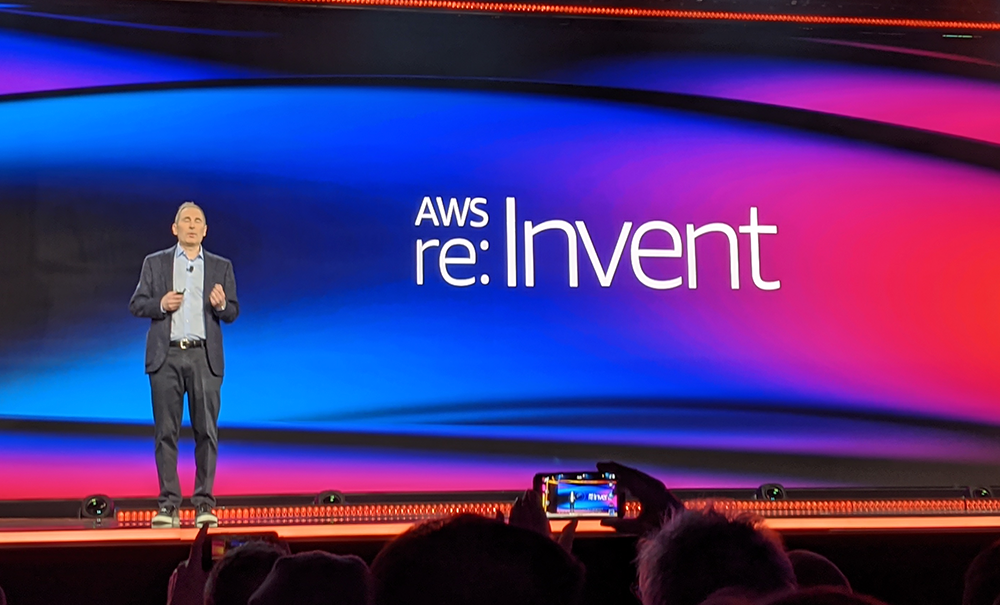

Fargate for EKS

Kubernetes has grown a lot in popularity over the last couple of years; however, there’s a lot of overhead required to manage a Kubernetes cluster. This overhead has become just another thing developers need to learn in order to deploy their applications.

Fargate for EKS is potentially a solution to this hurdle, allowing developers to run their EKS pods on Fargate rather than on worker nodes they have to manage themselves.
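To make this a little more concrete, here is a minimal sketch of how a Fargate profile request for an existing EKS cluster might be assembled with Python. The cluster name, profile name, role ARN, namespace and label are all hypothetical placeholders, and the helper function is my own illustration rather than anything AWS ships. The field names follow the EKS `CreateFargateProfile` API as I understand it.

```python
from typing import Dict, List, Optional


def build_fargate_profile_request(
    cluster_name: str,
    profile_name: str,
    pod_execution_role_arn: str,
    namespace: str,
    labels: Optional[Dict[str, str]] = None,
    subnets: Optional[List[str]] = None,
) -> dict:
    """Build keyword arguments for the EKS CreateFargateProfile call."""
    # A selector tells EKS which pods should be scheduled onto Fargate.
    selector: dict = {"namespace": namespace}
    if labels:
        selector["labels"] = dict(labels)

    request = {
        "clusterName": cluster_name,
        "fargateProfileName": profile_name,
        "podExecutionRoleArn": pod_execution_role_arn,
        "selectors": [selector],
    }
    if subnets:
        request["subnets"] = list(subnets)
    return request


# Hypothetical values, for illustration only.
request = build_fargate_profile_request(
    cluster_name="demo-cluster",
    profile_name="demo-profile",
    pod_execution_role_arn="arn:aws:iam::123456789012:role/demo-pod-execution-role",
    namespace="default",
    labels={"compute": "fargate"},
)
print(request["selectors"])

# Sending it requires boto3 and AWS credentials, so it is left as a comment:
# boto3.client("eks").create_fargate_profile(**request)
```

Pods in the `default` namespace carrying the `compute: fargate` label would then be candidates for Fargate, while everything else keeps running wherever it runs today.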

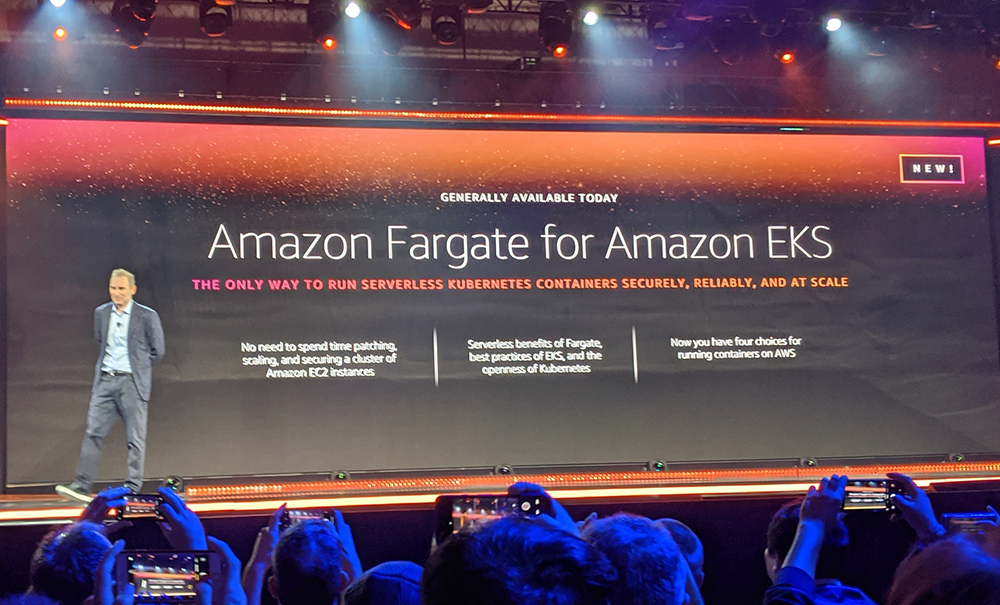

Fargate spot instances

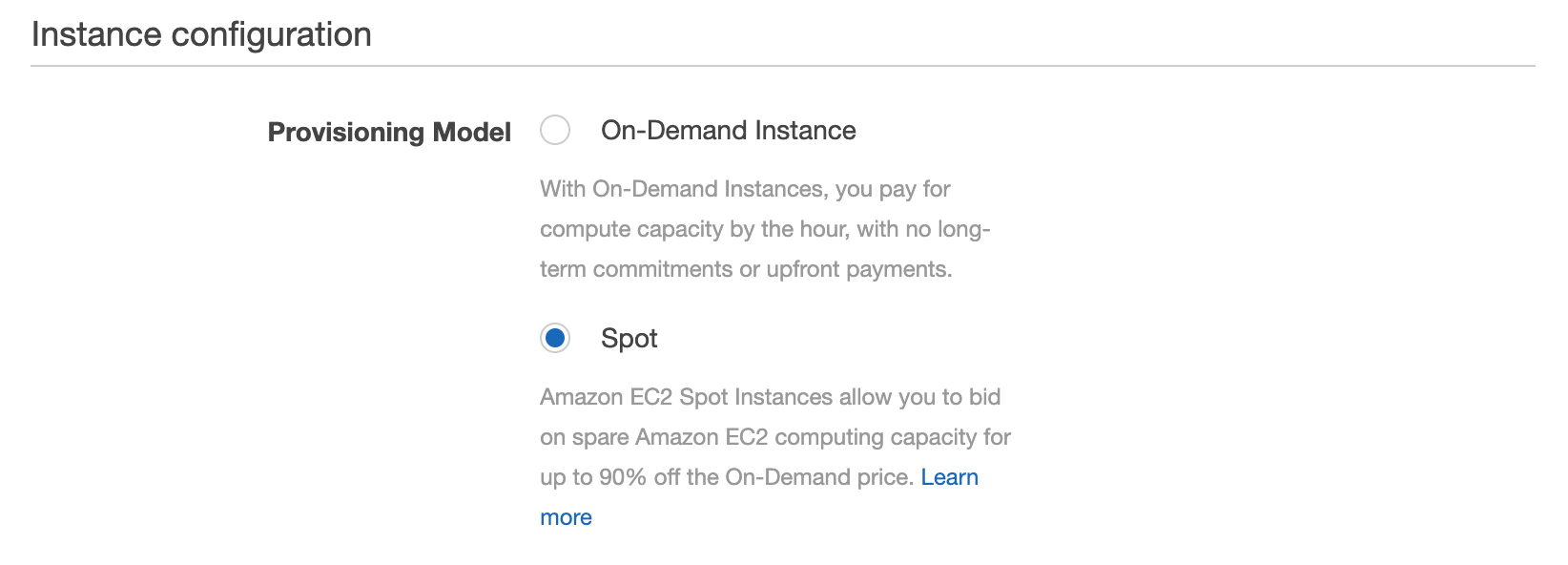

If you aren’t familiar with spot pricing, your wallet might become a lot happier after reading this. In the context of EC2, spot instances are compute that can be taken away from you at any point in time, meaning that applications running on spot need to be interruption tolerant. The benefit, however, is that you’ll receive a discount of up to 70% by running on spot.

Fargate spot instances offer the same opportunity, but for your container workloads. You can set up a spot cluster by simply selecting spot under Instance Configuration.
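If you would rather script it than click through the console, the same idea can be expressed through ECS capacity providers. The sketch below assumes a new cluster with a hypothetical name and an arbitrary mix of one guaranteed on-demand task plus a 3:1 weighting in favour of spot. The helper and its defaults are my own illustration, not a recommended ratio.

```python
def build_spot_cluster_request(
    cluster_name: str,
    on_demand_base: int = 1,
    on_demand_weight: int = 1,
    spot_weight: int = 3,
) -> dict:
    """Build keyword arguments for an ECS CreateCluster call that mixes
    regular Fargate capacity with Fargate Spot capacity."""
    if min(on_demand_base, on_demand_weight, spot_weight) < 0:
        raise ValueError("base and weights must not be negative")

    return {
        "clusterName": cluster_name,
        "capacityProviders": ["FARGATE", "FARGATE_SPOT"],
        "defaultCapacityProviderStrategy": [
            # 'base' tasks always land on regular Fargate, so something
            # stays up even if spot capacity is reclaimed.
            {
                "capacityProvider": "FARGATE",
                "base": on_demand_base,
                "weight": on_demand_weight,
            },
            # Remaining tasks are spread according to the relative weights.
            {"capacityProvider": "FARGATE_SPOT", "weight": spot_weight},
        ],
    }


# Hypothetical cluster name, for illustration only.
request = build_spot_cluster_request("demo-spot-cluster")
print(request["defaultCapacityProviderStrategy"])

# Sending it requires boto3 and AWS credentials, so it is left as a comment:
# boto3.client("ecs").create_cluster(**request)
```

Keeping a small on-demand base is one way to respect the interruption-tolerance caveat above: the interruptible share of the service rides on spot while a minimum stays on regular Fargate.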

SageMaker

re:Invent 2019 had a lot to offer in the machine learning & AI space, with the most notable entries coming in under the existing SageMaker suite.

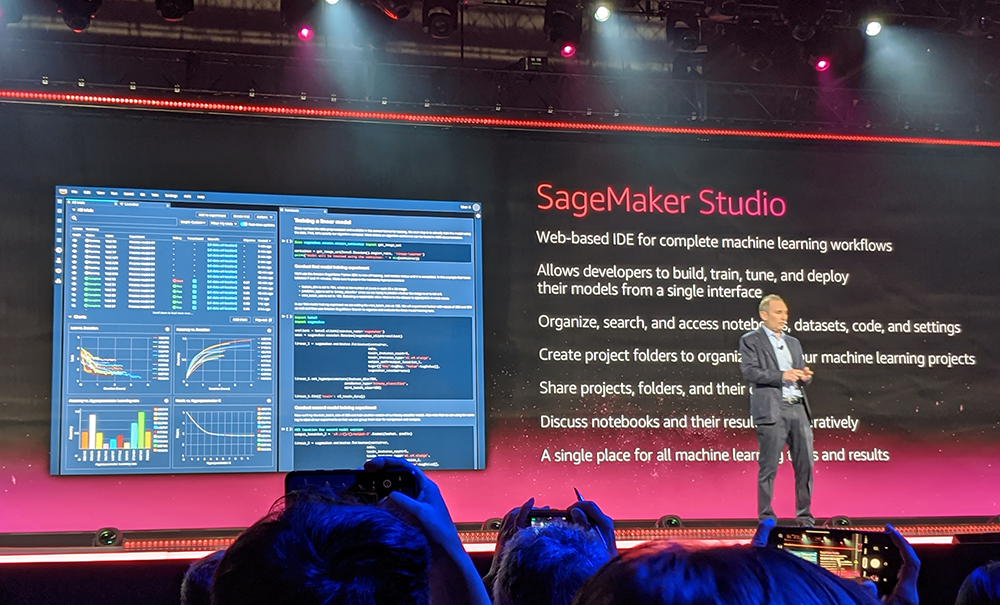

SageMaker Studio

SageMaker Studio was an exciting announcement as it marks a huge jump forward for the existing notebook platform AWS had to offer. SageMaker Studio presents itself as a single pane of glass for all ML workflows, and it isn’t lying about that.

In the past I’ve criticised the lacklustre effort put into making SageMaker as useful as the competition’s offerings (Google Notebooks, JupyterLab). Most of my personal problems came from:

- No way to change instance type under notebook without shutting down experiments

- Very lacking visualisation / graphing capabilities

- Model debugging & monitoring (couldn’t easily run tensorboardX)

Luckily all the capabilities above (and more) are supported in SageMaker Studio.

SageMaker AutoPilot

Auto ML has been available on competing clouds for a while now (Custom Vision AI, Cloud AutoML), so it was expected that AWS would throw their own hat into the ring.

The idea behind Auto Machine Learning is that you upload bulk data and, with minimal direction on what features and characteristics you would like to see, the platform creates a general model for you. It is usually very useful for quickly validating problems, as it can set up some simple ML experiments for you without too much engineering work.

SageMaker AutoPilot takes this existing idea and (hopefully) improves on it with a number of extra features like:

- Automatic hyperparameter optimisation

- Easier distributed training

- Better algorithm selection for different data types and problem scopes:

  - Linear regression

  - Binary classification

  - Multi-class classification
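As a rough illustration of the "minimal direction" idea, the sketch below assembles a request for the SageMaker `CreateAutoMLJob` API: point it at tabular data in S3, name the target column, and optionally pick one of the three problem types listed above. The bucket paths, job name, column name and role ARN are hypothetical, and the metric paired with each problem type is an assumption chosen for illustration.

```python
from typing import Optional

# Assumed objective metric per problem type (illustrative choice).
OBJECTIVE_BY_PROBLEM_TYPE = {
    "Regression": "MSE",
    "BinaryClassification": "F1",
    "MulticlassClassification": "Accuracy",
}


def build_automl_job_request(
    job_name: str,
    input_s3_uri: str,
    output_s3_uri: str,
    target_column: str,
    role_arn: str,
    problem_type: Optional[str] = None,
) -> dict:
    """Build keyword arguments for the SageMaker CreateAutoMLJob call."""
    request = {
        "AutoMLJobName": job_name,
        "InputDataConfig": [
            {
                "DataSource": {
                    "S3DataSource": {
                        "S3DataType": "S3Prefix",
                        "S3Uri": input_s3_uri,
                    }
                },
                # The column the generated model should learn to predict.
                "TargetAttributeName": target_column,
            }
        ],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "RoleArn": role_arn,
    }
    # If no problem type is given, the service is left to infer it.
    if problem_type is not None:
        if problem_type not in OBJECTIVE_BY_PROBLEM_TYPE:
            raise ValueError(f"unsupported problem type: {problem_type}")
        request["ProblemType"] = problem_type
        request["AutoMLJobObjective"] = {
            "MetricName": OBJECTIVE_BY_PROBLEM_TYPE[problem_type]
        }
    return request


# Hypothetical values, for illustration only.
request = build_automl_job_request(
    job_name="demo-churn-job",
    input_s3_uri="s3://demo-bucket/churn/train/",
    output_s3_uri="s3://demo-bucket/churn/output/",
    target_column="churned",
    role_arn="arn:aws:iam::123456789012:role/demo-sagemaker-role",
    problem_type="BinaryClassification",
)
print(request["ProblemType"], request["AutoMLJobObjective"])

# Sending it requires boto3 and AWS credentials, so it is left as a comment:
# boto3.client("sagemaker").create_auto_ml_job(**request)
```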

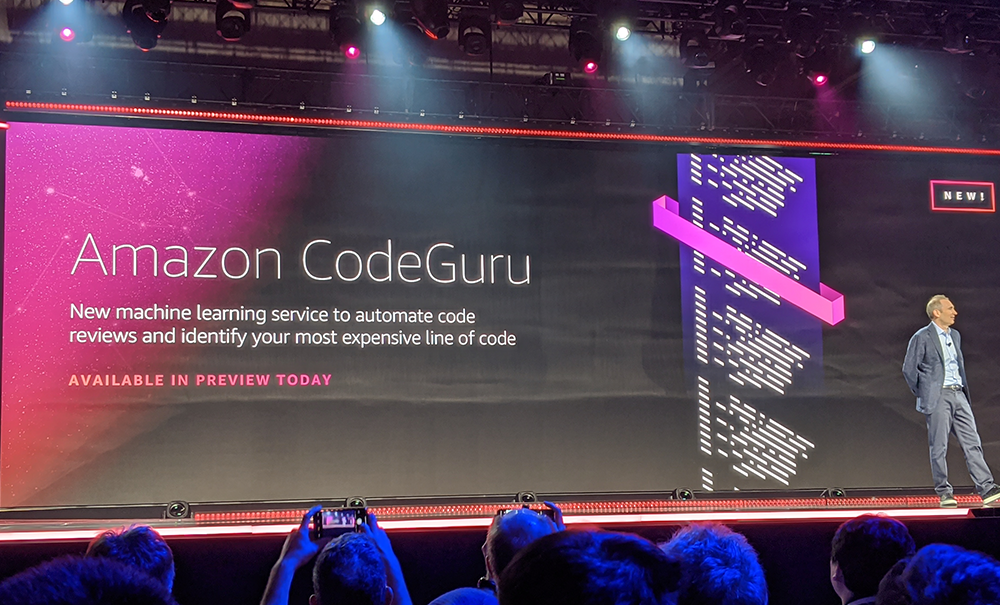

CodeGuru

CodeGuru is an automated, machine learning-backed code review system that was built from internal code review data within AWS.

The service has a lot of potential at face value; however, there are currently a couple of caveats to it:

- $0.75 per 100 lines of code scanned per month

- Java support only

  - Due to the profiler requiring code to be put into your application, a custom SDK needs to be written for each language

These are two major drawbacks to the service, so I’m hoping it gets cheaper and supports more languages in the very near future. It would be fantastic to see support for languages like TypeScript and Python, given these are what we’ve commonly been using to develop software in recent years.
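To put the pricing caveat into perspective, here is a back-of-the-envelope calculation using the $0.75 per 100 lines figure quoted above. It assumes the charge scales pro rata with lines of code and ignores any free tier or rounding rules, which I haven’t verified. The repository size is a made-up example.

```python
from decimal import Decimal

# Price quoted in this post: $0.75 per 100 lines of code scanned per month.
PRICE_PER_100_LINES = Decimal("0.75")


def estimate_monthly_review_cost(lines_of_code: int) -> Decimal:
    """Estimate the monthly review cost in dollars (pro-rata assumption)."""
    if lines_of_code < 0:
        raise ValueError("lines_of_code must not be negative")
    cost = Decimal(lines_of_code) / Decimal(100) * PRICE_PER_100_LINES
    return cost.quantize(Decimal("0.01"))


# A hypothetical 200,000 line Java codebase.
monthly_cost = estimate_monthly_review_cost(200_000)
print(f"${monthly_cost} per month")  # prints "$1500.00 per month"
```

At that rate, even a mid-sized codebase adds up quickly, which is why the price point stood out to me.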

Amazon Kendra

Enterprise search is a hot trend that’s beginning to pop up lately. Search functionality comparable to Google’s could give users a more intuitive way to discover contextually relevant data within their enterprise domain.

This is where Amazon Kendra could be revolutionary, so I’m excited to give this service a try.

It should be noted that the service is not cheap, and only the Enterprise edition ($7.00 per hour) is currently available. This is likely due to Kendra being built on top of an indexing engine that is pretty expensive at scale (most probably Elasticsearch), so the costs of running a cluster are passed on to us.
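For a sense of scale, the hourly rate above can be turned into a monthly figure. This is a simple sketch that assumes the index runs around the clock for a 30-day month and that nothing else (such as connectors or extra capacity) is billed. Those assumptions are mine.

```python
from decimal import Decimal

# Enterprise edition rate quoted in this post.
HOURLY_RATE = Decimal("7.00")


def estimate_index_cost(hours: int) -> Decimal:
    """Estimate the cost in dollars of keeping one index running."""
    if hours < 0:
        raise ValueError("hours must not be negative")
    return HOURLY_RATE * hours


# Assumption: always on, 24 hours a day for 30 days.
monthly_cost = estimate_index_cost(24 * 30)
print(f"${monthly_cost} per month")  # prints "$5040.00 per month"
```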

Notable Entries

Since this list was heavily biased towards things that stood out to me, I’ll list some other services below that are definitely also on my radar.

- AWS Local Zones & Outposts

  - As a lot of our work is out of Perth, WA, these offerings might be suitable for larger organizations who need the best latency within Western Australia

- Amazon Contact Lens

  - As Connect users, this simple feature could offer a lot of insight into calls

- AWS DeepComposer

  - A Web MIDI keyboard that lets you play with Generative AI

- Lambda Provisioned Concurrency

  - Allows you to provision warm instances of Lambda to remove cold starts

- AWS Step Functions Express Workflows

  - Effectively splits Step Functions up, adding a cheap (but short-lived) option called Express
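Of the entries above, Lambda Provisioned Concurrency is the easiest to try out from code. The sketch below builds the arguments for Lambda’s `PutProvisionedConcurrencyConfig` API. The function name, alias and instance count are hypothetical, and the helper is my own illustration. Provisioned concurrency is configured against a published version or alias rather than `$LATEST`, which the helper checks up front.

```python
def build_provisioned_concurrency_request(
    function_name: str,
    qualifier: str,
    warm_instances: int,
) -> dict:
    """Build keyword arguments for Lambda's PutProvisionedConcurrencyConfig."""
    if qualifier == "$LATEST":
        raise ValueError("use a published version or alias, not $LATEST")
    if warm_instances < 1:
        raise ValueError("warm_instances must be at least 1")

    return {
        "FunctionName": function_name,
        "Qualifier": qualifier,
        # Number of execution environments kept initialised and ready.
        "ProvisionedConcurrentExecutions": warm_instances,
    }


# Hypothetical values, for illustration only.
request = build_provisioned_concurrency_request("demo-api-handler", "live", 5)
print(request)

# Sending it requires boto3 and AWS credentials, so it is left as a comment:
# boto3.client("lambda").put_provisioned_concurrency_config(**request)
```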

Closing Thoughts

Attending re:Invent was a really rewarding experience that I don’t think can be replicated through the web sessions. Being able to chat with other passionate people who were in the same service spaces as me really ignited a fire in me to keep experimenting and work with the newest features.

As an AWS Community Hero, I also had the opportunity to attend a number of networking events. I especially enjoyed meeting the new machine learning & data heroes and finding out which parts of the new SageMaker offerings were most exciting to them.

If you have any questions, or you want to have a deeper chat about any of the new releases from re:Invent, please reach out to us on Twitter @mechanicalrock_!